This project of the Artificial Intelligence Group at the University of Bielefeld demonstrates classic 3D user interaction techniques for navigation/travel, selection and manipulation, applied to a virtual supermarket scenario. The implementations, a user study and the video were produced by our student group “Interaction in Virtual Reality” in fall/winter 2009/2010 as a video submission to the Grand Prize contest of the 3D User Interfaces conference in 2010.

Archive for January, 2010

Classic 3D User Interaction Techniques for Immersive Virtual Reality Revisited

Sunday, January 31st, 2010

EADS TouchLab

Saturday, January 30th, 2010

The EADS TouchLab is a multimedia installation designed and developed by the agency NewMedia Yuppies GmbH (NMY). The table is placed within a multimedia environment and serves as a next-generation simulation and presentation tool for the client EADS. Together with its cooperation partner Fraunhofer IGD in Darmstadt, NMY developed an advanced system that lets the user interact fully within a 3D space in real time using multi-touch gestures. The installation is based on the InstantReality framework.

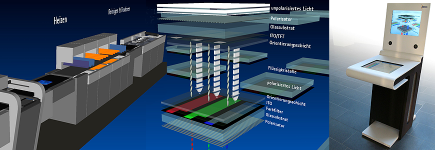

Display Explorer

Friday, January 29th, 2010

An interactive kiosk for Merck Darmstadt that explains the behaviour of liquid crystals in flat screens through a real-time 3D simulation. Ten custom-designed portable terminals, each with a touch screen and a 20″ autostereoscopic display, were built for trade shows and permanent exhibitions worldwide. The 3D scene is rendered and animated with instantplayer and controlled by a Flash application via a TCP/IP socket. Concept, terminal design and 3D application programming were done by Invirt GmbH for CAPCom AG. Methods for driving the Philips 3D display and a GLSL shader for simulating an etching process were developed by Fraunhofer IGD.
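The control path described above (a front-end application sending commands to the 3D player over a TCP/IP socket) can be sketched roughly as follows. This is only an illustration in Python: the newline-terminated text commands, the command names and the loopback server standing in for the player are all assumptions, not the protocol used in the kiosk.

```python
import socket
import threading


def serve_once(server_sock, received):
    """Stand-in for the 3D player: accept one connection, collect commands."""
    conn, _ = server_sock.accept()
    with conn, conn.makefile("r", encoding="utf-8") as reader:
        for line in reader:  # ends when the client closes the connection
            received.append(line.rstrip("\n"))


def send_commands(host, port, commands):
    """Stand-in for the front end: send each command as one UTF-8 line."""
    with socket.create_connection((host, port)) as sock:
        for command in commands:
            sock.sendall((command + "\n").encode("utf-8"))


def demo():
    """Run both sides on the loopback interface and return what arrived."""
    received = []
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    server.listen(1)
    port = server.getsockname()[1]
    worker = threading.Thread(target=serve_once, args=(server, received))
    worker.start()
    # Hypothetical command names, chosen only for this sketch.
    send_commands("127.0.0.1", port, ["SET voltage 3.5", "PLAY"])
    worker.join()
    server.close()
    return received
```

A line-based text protocol keeps the two applications loosely coupled: the front end only needs to open a socket and write strings, whatever language it is written in.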

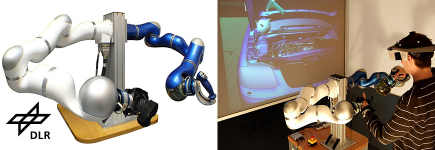

Haptic Rendering for Virtual Reality Simulations

Thursday, January 28th, 2010

Interactive VR simulations combine visual and haptic feedback. The haptic signals are displayed to a human operator through a haptic device at a rate of 1 kHz. The research of the DLR Institute of Robotics and Mechatronics in this project comprises the design and control of light-weight robots for haptic interaction, as well as volume-based haptic rendering. Typical applications are assembly simulations, training of mechanics, and skill transfer.

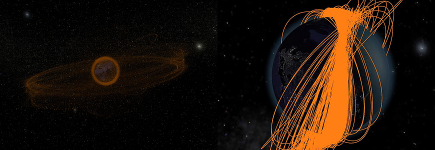

Space Debris Visualization

Wednesday, January 27th, 2010

With more and more man-made objects orbiting the Earth, most of them junk and high-velocity debris, space travel and maintaining satellite orbits are becoming increasingly problematic. To illustrate the dangers of the more than 12,000 tracked (and 600,000 estimated) objects, ESA uses InstantReality to visualize the distribution and temporal variation of these objects. The results can be viewed in real time; they have also been compiled into an informative video, which is available at the ESOC in Darmstadt.

Augmented Reality Sightseeing

Tuesday, January 26th, 2010

At CeBIT 2009 Fraunhofer IGD presented Augmented Reality technology for assistant living using the example of Berlin. The centerpiece of the project was a table with a satellite image of Berlin on which a 3D model of the Berlin Wall and the urban development from 1940 to 2008 are displayed. For this purpose, urban grain plans showing the areas covered with buildings are overlaid on the satellite image. The visualization was presented on UMPCs and the iPhone via video see-through.

Furthermore, posters showed the system working outdoors. Historic photographs are seamlessly superimposed on the camera view, showing the development of landmarks. The interface is kept very simple by fading in information and overlays in a context- and location-sensitive manner.

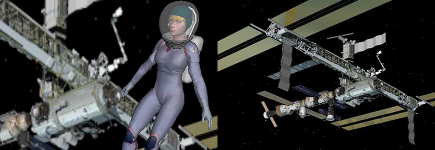

Educational 3D Visualization of Astronaut Motion in Microgravity

Monday, January 25th, 2010

In this joint project of the MIT Department of Aeronautics and Astronautics and the MIT Office of Educational Innovation and Technology, researchers collaborated with visual artists to create three-dimensional visualizations of astronaut motion in microgravity. Through the graphical user interface, which is implemented in X3D, students can interactively explore how astronauts rotate in a microgravity environment without using any external torques, i.e. without contact with the surroundings. These rotations are simulated with results from recent research in astronautics [Stirling, Ph.D. thesis, 2008] using mathematical algorithms that implement core curriculum concepts, such as conservation of linear and angular momentum. The computer character, which was modeled and texture-mapped in Maya®, wears a next-generation astronaut space suit that MIT researchers are developing for NASA.
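To illustrate how a rotation without external torque is possible, here is a minimal sketch in Python. It is not the model from the cited thesis: it assumes a planar two-segment body (torso plus one limb) rotating about a shared axis with zero total angular momentum, and all inertia values are made up for the example. Sweeping the limb while it is extended and returning it while it is tucked leaves a net torso rotation, because the limb's moment of inertia differs between the two strokes.

```python
def body_rotation(i_body, i_limb, limb_sweep):
    """Torso rotation (rad) caused by sweeping a limb by limb_sweep (rad).

    Zero total angular momentum about a shared axis gives
    i_body * w_body + i_limb * (w_body + w_rel) = 0, so the torso turns by
    -i_limb / (i_body + i_limb) times the relative sweep angle.
    """
    return -i_limb * limb_sweep / (i_body + i_limb)


def net_rotation_per_cycle(i_body, i_limb_extended, i_limb_tucked, limb_sweep):
    """Net torso rotation after one extended stroke and one tucked return."""
    forward = body_rotation(i_body, i_limb_extended, limb_sweep)
    back = body_rotation(i_body, i_limb_tucked, -limb_sweep)
    return forward + back


# Hypothetical inertias in kg*m^2 and a 1 rad sweep, for illustration only.
cycle = net_rotation_per_cycle(10.0, 2.0, 0.5, 1.0)
```

With equal inertias on both strokes the two contributions cancel exactly, which is why changing the body configuration between strokes is essential.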

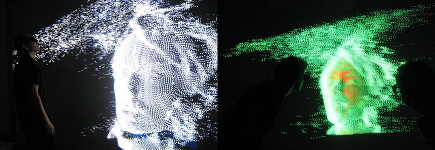

Radiohead’s ‘House of Cards’

Sunday, January 24th, 2010

Radiohead released the 3D data of their video “House of Cards” under a Creative Commons license. We wrote a converter script that creates an animated ParticleSet from the CSV point data. The result is a real-time rendered music video you can walk through. The new Heyewall 2.0 renders the data at a resolution of 8160 x 4000 pixels.
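A converter of this kind might look roughly like the following Python sketch. It is not the original script: it handles a single frame only, assumes each CSV row starts with x, y, z coordinates (any further columns are ignored), and emits a standard X3D `PointSet` as a stand-in for the InstantReality ParticleSet node, whose fields are not described here.

```python
import csv
import io


def csv_to_x3d_pointset(csv_text):
    """Convert CSV rows starting with x, y, z into an X3D PointSet fragment."""
    points = []
    for row in csv.reader(io.StringIO(csv_text)):
        if len(row) < 3:
            continue  # skip blank or incomplete rows
        x, y, z = (float(value) for value in row[:3])
        points.append(f"{x:g} {y:g} {z:g}")
    coords = ", ".join(points)
    return (
        "<Shape>\n"
        "  <PointSet>\n"
        f'    <Coordinate point="{coords}"/>\n'
        "  </PointSet>\n"
        "</Shape>"
    )


# Two made-up sample rows; the fourth column is ignored by this sketch.
sample = "1,2,3,100\n4.5,0,-1,80\n"
fragment = csv_to_x3d_pointset(sample)
```

An animated version would repeat this per frame and switch or interpolate between the resulting coordinate sets.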

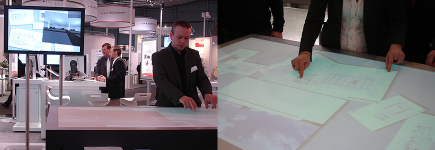

Multi-Touch 3D Architecture Application at CeBIT 2008

Saturday, January 23rd, 2010

IGD’s multi-touch table application for the visualization of 3D architectural models was presented at CeBIT 2008. It features several scalable architectural 2D plans. Multiple users can move and zoom these plans as in other multi-touch applications.

The key feature, however, is the tile with a high-quality 3D view of the building. We implemented the multi-touch 3D camera gestures we introduced last year: you grab a plan with the left hand. One finger of the right hand moves the camera through the 3D model. A second finger defines the orientation of the camera. This enables cinematic camera movements in 3D. When the second finger points at a certain object on the plan and the first finger moves around it, the 3D camera moves around that object while keeping it in focus.
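The two-finger camera gesture can be sketched as follows in Python. This is an assumption-laden illustration rather than the application's code: touch points are taken to be already converted to plan coordinates in metres, plan (x, y) is mapped to world (x, z), and the eye height is a made-up constant.

```python
import math

EYE_HEIGHT = 1.7  # hypothetical eye height in metres


def camera_pose(first_finger, second_finger, eye_height=EYE_HEIGHT):
    """Return (position, view_direction) for two touch points on the plan.

    first_finger places the camera; second_finger marks the point it looks at.
    """
    dx = second_finger[0] - first_finger[0]
    dz = second_finger[1] - first_finger[1]
    length = math.hypot(dx, dz)
    if length == 0.0:
        raise ValueError("fingers coincide; view direction is undefined")
    position = (float(first_finger[0]), eye_height, float(first_finger[1]))
    direction = (dx / length, 0.0, dz / length)  # unit vector in the floor plane
    return position, direction


# Orbit: the second finger rests on a target while the first circles around it.
target = (5.0, 5.0)
radius = 2.0
orbit = [
    camera_pose(
        (target[0] + radius * math.cos(a), target[1] + radius * math.sin(a)),
        target,
    )
    for a in (0.0, math.pi / 2, math.pi)
]
```

Because the view direction is recomputed from both touch points on every update, the target stays centred for as long as the second finger rests on it.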

iTACITUS

Friday, January 22nd, 2010

iTACITUS is a Sixth Framework Programme project that aims to provide a mobile cultural heritage information system for the individual. By combining itinerary planning, navigation and rich on-site content, based upon a dispersed repository of historical and cultural resources, it enables a complete information system and media experience for historically interested travellers. IGD’s part is the development of a “Mobile Augmented Reality (AR) Guide Framework” for Cultural Heritage (CH) sites. The framework delivers advanced markerless tracking on mobile computers as well as new interaction paradigms in AR featuring touch and motion capabilities. In addition to visual components like annotated landscapes and superimposed environments, the framework will feature a reactive acoustic AR module.