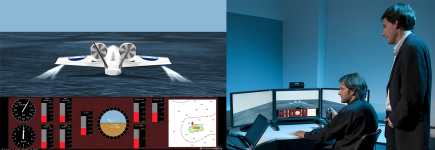

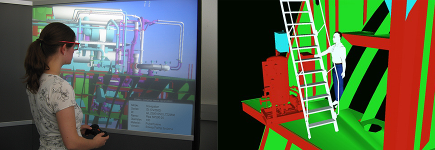

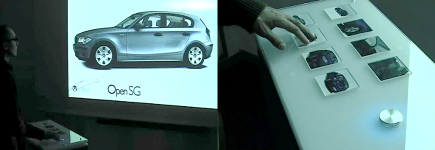

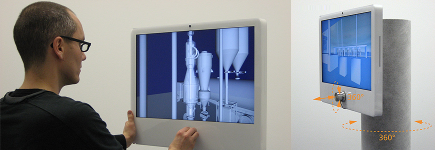

Fraunhofer Austria developed an optimized 3D stereoscopic display based on parallax barriers for a driving simulator. The simulator is built and run by the Institute of Automotive Engineering at the Graz University of Technology. The overall purpose of the simulator is to enable user studies in a reproducible environment under controlled conditions to test and evaluate advanced driver assistance systems.

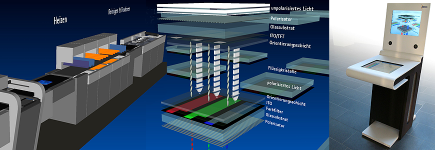

The simulator consists of a modified MINI Countryman chassis with eight liquid-crystal displays (LCDs) mounted around the windscreen and the front side windows. Four 55-inch LCDs are placed radially around the hood of the car at a slanted angle. Two 23-inch LCDs are used for each of the two front side windows. The four LCDs in the front are equipped with parallax barriers made of 2 cm thick acrylic glass to minimize strain caused by the slanted angle. Each barrier is printed with a custom-made striped pattern, which is the result of an optimization process. The displays are connected to a cluster of four “standard” computers with powerful graphics cards. Instant Reality is used for rendering.
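
To give a rough idea of what a striped barrier pattern has to achieve, the following is a minimal sketch of the textbook two-view parallax barrier geometry. It is not the optimized pattern used in the simulator: it assumes a barrier in air (refraction in the acrylic glass is ignored), a single viewer at a fixed distance, and hypothetical values for pixel pitch, viewing distance, and eye separation. The function name `two_view_barrier` is also only illustrative.

```python
def two_view_barrier(pixel_pitch_mm, viewing_distance_mm, eye_separation_mm=65.0):
    """Textbook two-view parallax barrier geometry (in air, no refraction).

    viewing_distance_mm is measured from the barrier to the viewer.
    Returns (gap_mm, barrier_pitch_mm).
    """
    # Similar triangles: through one slit, the two eyes must see two
    # neighbouring pixel columns, so pixel_pitch / gap = eye_sep / distance.
    gap = pixel_pitch_mm * viewing_distance_mm / eye_separation_mm

    # The slit period is slightly smaller than two pixel pitches so that the
    # views of all slits converge at the viewer position.
    pitch = 2.0 * pixel_pitch_mm * viewing_distance_mm / (viewing_distance_mm + gap)
    return gap, pitch


# Hypothetical example values (assumptions, not taken from the project):
# roughly 0.63 mm pixel pitch, viewer 1 m away, 65 mm eye separation.
gap_mm, pitch_mm = two_view_barrier(0.63, 1000.0)
print(f"gap: {gap_mm:.2f} mm, barrier pitch: {pitch_mm:.4f} mm")
```

In this idealized model the barrier pitch is always slightly less than twice the pixel pitch. A real setup with slanted displays and thick acrylic glass deviates from it, which is one reason a pattern may need to be optimized rather than computed from these two formulas alone.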

The project “MueGen Driving” is funded by the Austrian Ministry for Transport, Innovation and Technology and the Austrian Research Agency in the FEMtech Program Talents. Furthermore, the work is supported by the government of the Austrian federal state of Styria within the project “CUBA – Contextual User Behavior Analysis”.